Data governance may not always get the spotlight, but it’s the backbone of every reliable data platform. It keeps sensitive information protected, ensures audits run smoothly, and helps teams work confidently without worrying about compliance gaps.

Over the years, Databricks (DBX) has steadily strengthened this space with Unity Catalog — a single, consistent system that organizes, secures, and governs all your data and AI assets across the entire lakehouse.

And honestly?

It’s helped us a lot.

Unity Catalog already offered:

- A single place to manage permissions

- Row- and column-level security

- Lineage tracking

- Consistent access across notebooks, SQL, workflows, and pipelines

- Secure sharing across teams

- One governance model across all DBX workspaces

In short: it brought clarity to what used to be scattered, inconsistent, and hard to maintain.

But as data grows, chaos grows with it.

Sensitive info shows up in unexpected places. New datasets land every hour. Even the best RBAC setup eventually feels like a spreadsheet from 2004 — too many rows, too many columns, and nobody remembers why half the cells exist.

Unity Catalog now adds three new superpowers:

1. Governed Tags

2. Automated Data Classification

3. Attribute-Based Access Control (ABAC)

Together, they turn governance from manual chores into self-cleaning automation.

To understand how these upgrades work in real life, let’s walk through them using a fictional company — GlobalMart, a fast-growing e-commerce retailer expanding across regions and dealing with a rapidly growing data footprint.

GlobalMart’s journey gives us the perfect lens to explore each feature, how it’s used, and how it transforms governance at scale.

As organizations grow, their data grows even faster — new tables arrive daily, pipelines multiply, and sensitive fields start appearing in places no one anticipated. What began as a neat, manageable environment quickly turns into a sprawling ecosystem with thousands of datasets, each carrying different levels of sensitivity.

This is where traditional governance models start to break.

Manual reviews don’t scale.

Role-based permissions become complicated.

And sensitive data spreads silently across multiple domains.

Imagine GlobalMart, a fast-growing e-commerce retailer expanding across the US, Canada, and Europe.

In 2021, they had 40 tables.

In 2023, they had 400.

By 2025, they had 4,000+ tables across multiple regions, teams, and departments.

As the company grows, sensitive data ends up everywhere:

- Customer emails in 12 different tables

- Phone numbers in marketing datasets

- Salaries in 5 HR tables

- Credit card tokens in payments data

- Addresses in order, returns, and shipping datasets

Like glitter… once it spreads, it’s everywhere.

- The data engineering team manually reviewed every new table.

- Analysts often accidentally got access to sensitive columns.

- Masking rules were applied table by table.

- New datasets landed daily but governance rules lagged behind.

Even with Unity Catalog’s earlier version, governance felt like herding cats.

- GlobalMart defines security based on tags and attributes, not tables.

- Governance happens automatically as data lands.

- No one needs to “remember to lock down” columns anymore.

Governed Tags are like sticky notes with superpowers. You attach a tag to a table or column, and any policy written against that tag is enforced everywhere the tag appears.

- Example tags: PII, Confidential, Finance-Restricted, GDPR, Highly Sensitive

To hide PII from analysts, GlobalMart had to:

❌ Set permissions on each table

❌ Mask the same column again and again

❌ Manually update every time a new table arrived

If 40 tables had customer emails → 40 manual setups.

Now they do it once:

“If a column has tag = PII, mask it for everyone except Compliance.”

Databricks then:

✔ Applies it to every table

✔ Applies it to every new table coming tomorrow

✔ Applies it even if the column moves or gets renamed

✔ Ensures no analyst accidentally sees customer data

- No duplicated permission setups

- Zero room for human forgetting

- Sensitive data is always protected

- Policies scale without effort

> “Hide all PII columns from everyone except the Compliance team.”
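To make "write the rule once" concrete, here is a minimal Python sketch of a tag-targeted masking policy. It is an illustration only, not Databricks code: the `Policy` class, the `read_table` helper, and the table, column, and group names are hypothetical. In Unity Catalog, the equivalent enforcement comes from ABAC policies and masking functions.

```python
from dataclasses import dataclass


@dataclass
class Policy:
    """Hypothetical tag-targeted masking rule (not a Databricks API)."""
    tag: str            # the policy targets a tag, not a table or column name
    exempt_groups: set  # groups allowed to see raw values
    mask: str = "****"

    def apply(self, value, column_tags, user_groups):
        if self.tag in column_tags and not (user_groups & self.exempt_groups):
            return self.mask
        return value


def read_table(rows, column_tags, policies, user_groups):
    """Return the rows as a user with the given group memberships would see them."""
    visible = []
    for row in rows:
        seen = {}
        for col, value in row.items():
            for policy in policies:
                value = policy.apply(value, column_tags.get(col, set()), user_groups)
            seen[col] = value
        visible.append(seen)
    return visible


# Written once: "If a column has tag = PII, mask it for everyone except Compliance."
pii_policy = Policy(tag="PII", exempt_groups={"Compliance"})

# Today's table.
customers_tags = {"customer_email": {"PII"}, "country": set()}
customers = [{"customer_email": "john.doe@gmail.com", "country": "US"}]

# Tomorrow's table, with a differently named column: covered as soon as the
# column carries the PII tag, with no new policy written.
leads_tags = {"contact_email": {"PII"}, "campaign": set()}
leads = [{"contact_email": "jane@example.com", "campaign": "spring_sale"}]

for groups in ({"Analysts"}, {"Compliance"}):
    print(sorted(groups), read_table(customers, customers_tags, [pii_policy], groups))
    print(sorted(groups), read_table(leads, leads_tags, [pii_policy], groups))
```

Because the policy checks tags rather than table or column names, the `leads` table (a new table with a differently named email column) is masked without any extra setup.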

Let's return to GlobalMart. The company tracks:

- Newsletter signups

- Customer support interactions

- Checkout logs

- Marketing campaigns

- Loyalty program profiles

- Order feedback

Each of these pipelines brings in customer_email fields.

A data engineer (usually unlucky, always tired) had to:

- Mask customer_email in customers table

- Mask customer_email in marketing_leads table

- Mask customer_email in support_tickets table

- Mask customer_email in feedback table

And when a new table landed?

They had to start over.

If they forgot even one column — boom, compliance issue.

GlobalMart simply:

1. Tags email-like columns with PII

2. Defines a tag-level policy:

> “Mask all PII columns for every user except the Compliance and Security teams.”

- Any table whose email, phone_number, or address columns carry the PII tag automatically inherits the masking

- New datasets are covered instantly

- No manual rules needed

NOTE: Tags need ABAC policies to enforce masking/filtering. Tagging alone doesn’t secure data—workspace permissions and ownership also apply.
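The note above is easy to miss, so here is a tiny hypothetical model of it in Python: the column is tagged `PII` in every case, but the value is only masked once a policy targets that tag. The `visible_value` function and the policy tuples are illustrative assumptions, not a Unity Catalog API.

```python
# Hypothetical model: a tag is only metadata; what users see changes only
# when a policy targets that tag.
column_tags = {"customer_email": {"PII"}, "order_total": set()}


def visible_value(column, value, user_groups, policies):
    """Mask the value if a policy targets a tag on this column and the user is not exempt."""
    tags = column_tags.get(column, set())
    for tag, exempt_groups in policies:
        if tag in tags and not (user_groups & exempt_groups):
            return "****"
    return value


no_policies = []  # tagged, but nothing enforces the tag yet
pii_policies = [("PII", {"Compliance", "Security"})]  # mask PII except Compliance and Security

email = "john.doe@gmail.com"
print(visible_value("customer_email", email, {"Analysts"}, no_policies))  # still visible
print(visible_value("customer_email", email, {"Analysts"}, pii_policies))
print(visible_value("customer_email", email, {"Security"}, pii_policies))
```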

Unity Catalog can now scan tables automatically and classify sensitive fields.

Now, as the business expands, data keeps growing and new datasets keep arriving:

- New marketing emails

- Customer support case logs

- Loyalty signup data

- Partner sales feeds

Who remembers where “phone number” or “address” columns are?

GlobalMart needed humans to:

❌ Inspect data manually

❌ Tag columns correctly

❌ Ensure teams didn’t skip tagging

❌ Audit everything again monthly

People missed things.

Sensitive columns got left open.

Compliance teams panicked.

Unity Catalog auto-detects:

- Emails

- Phone numbers

- Names

- Government ID patterns

- Credit card numbers

- Address formats

Then it automatically:

✔ Tags them

✔ Applies the policies defined for those tags

✔ Secures access

✔ Masks sensitive fields

No manual inspection, and far less risk of missing something.
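Pattern-based detection of the kinds listed above can be sketched in a few lines of Python. This is a simplified, hypothetical classifier, not how Databricks implements Data Classification: the regular expressions, the North American phone format, the US SSN shape standing in for "government ID patterns", and the Luhn check for card numbers are assumptions chosen for illustration. Names and free-form addresses are omitted because simple patterns cannot recognize them reliably.

```python
import re
from typing import Optional

# Simplified, hypothetical detectors. Real classifiers use more signals than
# a regex on a single value.
PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "us_ssn": re.compile(r"\d{3}-\d{2}-\d{4}"),  # checked before phone numbers
    "phone_number": re.compile(  # North American style, optional +country code
        r"(?:\+\d{1,3}[\s.-]?)?\(?\d{3}\)?[\s.-]?\d{3}[\s.-]?\d{4}"
    ),
}
CARD_SHAPE = re.compile(r"[\d\s-]{13,23}")


def luhn_valid(number: str) -> bool:
    """Luhn checksum: separates plausible card numbers from random digit runs."""
    digits = [int(ch) for ch in number if ch.isdigit()]
    if not 13 <= len(digits) <= 19:
        return False
    total = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def classify_value(value: str) -> Optional[str]:
    """Return a sensitivity label for a single value, or None."""
    v = value.strip()
    if CARD_SHAPE.fullmatch(v) and luhn_valid(v):
        return "credit_card"
    for label, pattern in PATTERNS.items():
        if pattern.fullmatch(v):
            return label
    return None


for sample in ["john.doe@gmail.com", "+1 (415) 555-0100", "123-45-6789",
               "4111 1111 1111 1111", "1234 5678 9012 3456", "spring sale"]:
    print(repr(sample), "->", classify_value(sample))
```

The Luhn check is what keeps "1234 5678 9012 3456" from being flagged: it has the shape of a card number but fails the checksum.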

If UC sees something like:

john.doe@gmail.com

It knows:

✔ It’s an email

✔ It’s PII

✔ It should be masked

✔ Only allowed users should see the full value

- Zero dependency on engineers remembering to tag fields

- Fully consistent tagging

- Sensitive info is secure the moment it lands

NOTE: Auto-classification tags fields, but enforcement depends on ABAC policies. The feature is in Public Preview, so behavior may vary.
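The john.doe@gmail.com example can be sketched end to end in Python: sample a column, tag it when most values look like emails, then let a tag-driven mask decide what each reader sees. The 0.8 threshold, the partial-mask format, and the function names are hypothetical, and in Unity Catalog the masking step still requires an ABAC policy on the tag.

```python
import re

EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def classify_column(sample_values, threshold=0.8):
    """Tag a column as PII/email if most non-empty sampled values look like emails.

    The threshold keeps one stray address in a free-text column from tagging it.
    """
    values = [v.strip() for v in sample_values if v and v.strip()]
    if not values:
        return set()
    hits = sum(1 for v in values if EMAIL.fullmatch(v))
    return {"PII", "email"} if hits / len(values) >= threshold else set()


def read_value(value, column_tags, user_groups, allowed=frozenset({"Compliance"})):
    """Tag-driven partial mask: hide the local part unless the user is allowed."""
    if "PII" not in column_tags or user_groups & allowed:
        return value
    local, _, domain = value.partition("@")
    return local[:1] + "***@" + domain


sample = ["john.doe@gmail.com", "a.b@shop.example", "", "x@y.org"]
tags = classify_column(sample)
print(sorted(tags))
print(read_value("john.doe@gmail.com", tags, {"Analysts"}))
print(read_value("john.doe@gmail.com", tags, {"Compliance"}))
```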

RBAC (Role-Based Access Control) is great… until it isn’t.

- Roles multiply, exceptions pile up, and temporary access becomes a headache.

As GlobalMart's business expanded, its roles exploded too.

Over time, GlobalMart created:

- 12 Marketing roles

- 18 Finance roles

- 7 HR roles

- 21 Regional Analyst roles

And odd exceptions:

- “Give Megha access to EU data only this week”

- “Give HR one-time access to payroll analytics”

RBAC became chaos.

Permissions tied to roles meant:

❌ Too many roles

❌ Too many exceptions

❌ Hard to maintain

❌ Slow onboarding

❌ No easy way to manage temporary access
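One way to think about temporary exceptions such as "Give Megha access to EU data only this week" is as an attribute with an expiry date, so access lapses on its own instead of depending on someone to revoke a role. The sketch below is a hypothetical Python model (the `AttributeGrant` class, the dates, and the region values are assumptions); how such an attribute would reach Unity Catalog, for example through group membership, depends on your setup.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass(frozen=True)
class AttributeGrant:
    """Hypothetical user attribute, optionally time-bound."""
    user: str
    attribute: str
    value: str
    expires: Optional[date] = None  # None means the grant never expires

    def active(self, today: date) -> bool:
        return self.expires is None or today <= self.expires


def regions_for(user: str, grants: list, today: date) -> set:
    """Regions a user may see today, based only on currently active grants."""
    return {
        g.value
        for g in grants
        if g.user == user and g.attribute == "region" and g.active(today)
    }


week_start = date(2025, 3, 3)
grants = [
    AttributeGrant("megha", "region", "APAC"),  # hypothetical home region
    AttributeGrant("megha", "region", "EU", expires=week_start + timedelta(days=6)),  # this week only
]

print(sorted(regions_for("megha", grants, week_start)))
print(sorted(regions_for("megha", grants, week_start + timedelta(days=7))))
```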

Access is now based on attributes, like:

- User.team = “Marketing”

- User.region = “APAC”

- Data.tag = “PII”

- User.seniority = “Manager”

Example:

Policy:

“Analysts can only see data for their own region.”

So:

- APAC analyst → sees only APAC rows

- EU analyst → sees only EU rows

- Global manager → sees all rows
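The regional policy above can be sketched as a single Python predicate that reads user attributes at query time. The attribute names follow the User.region and User.seniority examples above; the "GLOBAL" region value, the sample rows, and the function names are hypothetical.

```python
# Hypothetical sample rows from a regional sales table.
orders = [
    {"order_id": 1, "region": "APAC", "amount": 120},
    {"order_id": 2, "region": "EU", "amount": 80},
    {"order_id": 3, "region": "APAC", "amount": 45},
]


def can_see_row(user: dict, row: dict) -> bool:
    """One policy: analysts see their own region; global managers see everything."""
    if user["seniority"] == "Manager" and user["region"] == "GLOBAL":
        return True
    return row["region"] == user["region"]


def visible_rows(user: dict, rows: list) -> list:
    return [r["order_id"] for r in rows if can_see_row(user, r)]


apac_analyst = {"team": "Analytics", "region": "APAC", "seniority": "Analyst"}
eu_analyst = {"team": "Analytics", "region": "EU", "seniority": "Analyst"}
global_manager = {"team": "Analytics", "region": "GLOBAL", "seniority": "Manager"}

for user in (apac_analyst, eu_analyst, global_manager):
    print(user["region"], user["seniority"], visible_rows(user, orders))
```

Onboarding a new analyst means setting their attributes correctly; no new role or per-region rule is needed.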

RBAC = “Who you are”

ABAC = “Who you are + what the data is + your context”

In other words, access now depends on user, data, and context attributes together.

Instead of giving someone a key to every door (roles), ABAC is like having a smart security system that knows who should enter which rooms, when, and under what conditions.
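A rough way to see the "who you are" versus "who you are + what the data is" difference is to count what each model has to maintain for the same rule: analysts see their own team's data for their own region, and managers see their team's data everywhere. The teams, regions, and rule below are hypothetical, chosen only to show how the numbers scale.

```python
from itertools import product

# Hypothetical attribute values for illustration; the real lists are not given.
teams = ["Marketing", "Finance", "HR", "Analytics"]
regions = ["US", "Canada", "EU", "APAC"]


def rbac_roles(teams, regions):
    """RBAC: each team needs an analyst role per region, plus a manager role."""
    analyst_roles = [f"{t}_{r}_analyst" for t, r in product(teams, regions)]
    manager_roles = [f"{t}_manager" for t in teams]
    return analyst_roles + manager_roles


def abac_allows(user: dict, row: dict) -> bool:
    """ABAC: one rule that reads user and data attributes at query time."""
    if row["team"] != user["team"]:
        return False
    return user["seniority"] == "Manager" or row["region"] == user["region"]


print(len(rbac_roles(teams, regions)))               # roles to maintain today
print(len(rbac_roles(teams, regions + ["LATAM"])))  # after adding one region
```

With RBAC, every new region adds one role per team; the ABAC rule does not change.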

NOTE: ABAC is in Public Preview and works alongside RBAC, workspace restrictions, and ownership. Custom UDFs may be required for row/column rules.

Read more in the Databricks ABAC documentation: https://docs.databricks.com/aws/en/data-governance/unity-catalog/abac/

Column masking and row filters are now automatic and tag-driven.

GlobalMart stores all customer data in one master table.

But analysts should only see customers from their own country.

Analysts had to apply filters manually, such as:

```sql
WHERE country = 'US'
WHERE country = 'Canada'
```

These were prone to mistakes.

Unity Catalog applies row filters that use ABAC attributes:

```
User.country == row.country
```

- US analysts only see US customers

- EU analysts see EU data

- Managers see everything

- No manual filters required

NOTE: Auto-masking/filters rely on ABAC policies and may need UDFs. Requires Databricks Runtime 16.4+; behavior may differ across catalogs.
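The difference between hand-typed WHERE clauses and a filter defined once can be sketched with Python's built-in sqlite3 module. This is only an analogy: SQLite has no row-filter feature, so the hypothetical `ROW_FILTER` predicate and `query_customers` helper stand in for a Unity Catalog row filter function, and the user's attributes are passed in by hand rather than resolved from the signed-in identity.

```python
import sqlite3

# In-memory stand-in for GlobalMart's master customer table; rows are made up.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (customer_id INTEGER, name TEXT, country TEXT)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [(1, "Ana", "US"), (2, "Ben", "Canada"), (3, "Chloe", "US")],
)

# Defined once, centrally: User.country == row.country, or the user is a manager.
ROW_FILTER = "(:is_manager = 1 OR country = :user_country)"


def query_customers(user: dict) -> list:
    """Every read goes through the same filter; analysts never type WHERE clauses."""
    sql = f"SELECT customer_id FROM customers WHERE {ROW_FILTER} ORDER BY customer_id"
    params = {"is_manager": int(user["is_manager"]), "user_country": user["country"]}
    return [row[0] for row in conn.execute(sql, params)]


us_analyst = {"country": "US", "is_manager": False}
ca_analyst = {"country": "Canada", "is_manager": False}
manager = {"country": "US", "is_manager": True}

for who, user in [("US analyst", us_analyst), ("Canada analyst", ca_analyst), ("Manager", manager)]:
    print(who, query_customers(user))
```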

This upgrade brings real benefits:

✨ Less manual work

✨ Stronger security

✨ Fewer governance mistakes

✨ Easier audits and compliance

✨ Faster onboarding

✨ Policies that scale automatically

✨ Governance that grows with your data

In short, Databricks is moving from:

“Manually secure each table” → “Secure everything based on attributes.”

And that’s exactly where modern data governance needs to be.

New engineers shouldn't learn Docker like they're defusing a bomb. Here's how we created a fear-free learning environment—and cut training time in half." (165 characters)

A complete beginner’s guide to data quality, covering key challenges, real-world examples, and best practices for building trustworthy data.

Explore the power of Databricks Lakehouse, Delta tables, and modern data engineering practices to build reliable, scalable, and high-quality data pipelines."

Ever wonder how Netflix instantly unlocks your account after payment, or how live sports scores update in real-time? It's all thanks to Change Data Capture (CDC). Instead of scanning entire databases repeatedly, CDC tracks exactly what changed. Learn how it works with real examples.

A real-world Terraform war story where a “simple” Azure SQL deployment spirals into seven hard-earned lessons, covering deprecated providers, breaking changes, hidden Azure policies, and why cloud tutorials age fast. A practical, honest read for anyone learning Infrastructure as Code the hard way.

From Excel to Interactive Dashboard: A hands-on journey building a dynamic pricing optimizer. I started with manual calculations in Excel to prove I understood the math, then automated the analysis with a Python pricing engine, and finally created an interactive Streamlit dashboard.

Data doesn’t wait - and neither should your insights. This blog breaks down streaming vs batch processing and shows, step by step, how to process real-time data using Azure Databricks.

A curious moment while shopping on Amazon turns into a deep dive into how Rufus, Amazon’s AI assistant, uses Generative AI, RAG, and semantic search to deliver real-time, accurate answers. This blog breaks down the architecture behind conversational commerce in a simple, story-driven way.

Tired of boring images? Meet the 'Jai & Veeru' of AI! See how combining Claude and Nano Banana Pro creates mind-blowing results for comics, diagrams, and more.

An honest, first-person account of learning dynamic pricing through hands-on Excel analysis. I tackled a real CPG problem : Should FreshJuice implement different prices for weekdays vs weekends across 30 retail stores?

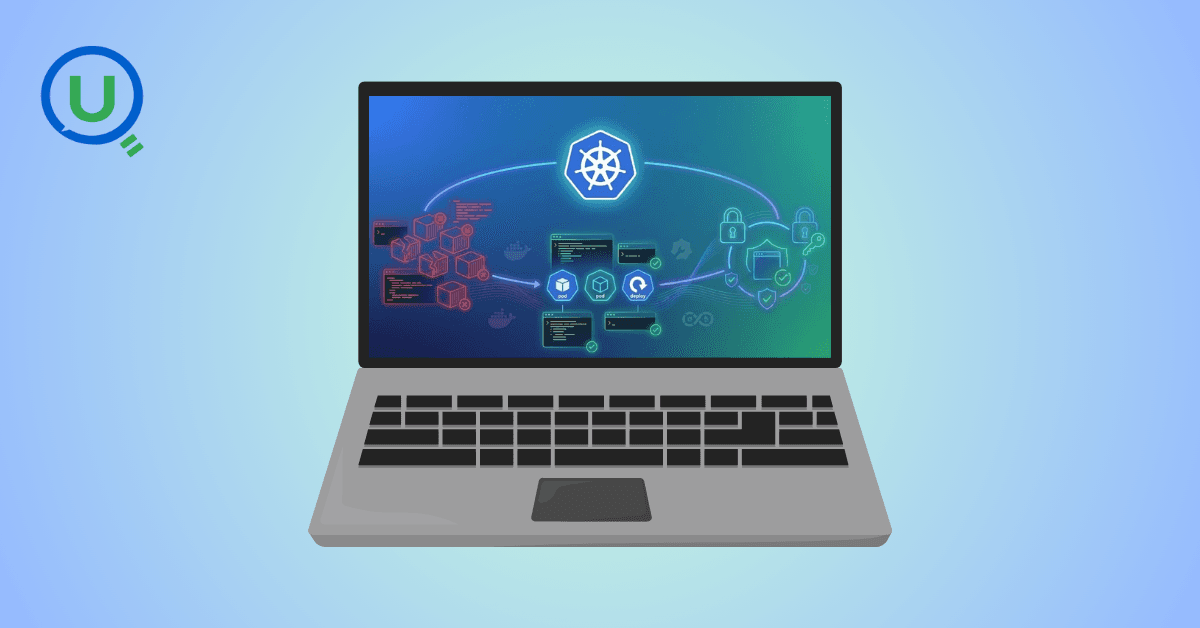

What I thought would be a simple RBAC implementation turned into a comprehensive lesson in Kubernetes deployment. Part 1: Fixing three critical deployment errors. Part 2: Implementing namespace-scoped RBAC security. Real terminal outputs and lessons learned included

This blog walks you through how Databricks Connect completely transforms PySpark development workflow by letting us run Databricks-backed Spark code directly from your local IDE. From setup to debugging to best practices this Blog covers it all.

This blog unpacks how brands like Amazon and Domino’s decide who gets which coupon and why. Learn how simple RFM metrics turn raw purchase data into smart, personalised loyalty offers.

Learn how Snowflake's Query Acceleration Service provides temporary compute bursts for heavy queries without upsizing. Per-second billing, automatic scaling.

A simple ETL job broke into a 5-hour Kubernetes DNS nightmare. This blog walks through the symptoms, the chase, and the surprisingly simple fix.

A data engineer started a large cluster for a short task and couldn’t stop it due to limited permissions, leaving it idle and causing unnecessary cloud costs. This highlights the need for proper access control and auto-termination.

Say goodbye to deployment headaches. Learn how Databricks Asset Bundles keep your pipelines consistent, reproducible, and stress-free—with real-world examples and practical tips for data engineers.

Tracking sensitive data across Snowflake gets overwhelming fast. Learn how object tagging solved my data governance challenges with automated masking, instant PII discovery, and effortless scaling. From manual spreadsheets to systematic control. A practical guide for data professionals.

My first hand experience learning the essential concepts of Dynamic pricing

Running data quality checks on retail sales distribution data

This blog explores my experience with cleaning datasets during the process of performing EDA for analyzing whether geographical attributes impact sales of beverages

Snowflake recommends 100–250 MB files for optimal loading, but why? What happens when you load one large file versus splitting it into smaller chunks? I tested this with real data, and the results were surprising. Click to discover how this simple change can drastically improve loading performance.

Master the bronze layer foundation of medallion architecture with COPY INTO - the command that handles incremental ingestion and schema evolution automatically. No more duplicate data, no more broken pipelines when new columns arrive. Your complete guide to production-ready raw data ingestion

Learn Git and GitHub step by step with this complete guide. From Git basics to branching, merging, push, pull, and resolving merge conflicts—this tutorial helps beginners and developers collaborate like pros.

Discover how data management, governance, and security work together—just like your favorite food delivery app. Learn why these three pillars turn raw data into trusted insights, ensuring trust, compliance, and business growth.

Beginner’s journey in AWS Data Engineering—building a retail data pipeline with S3, Glue, and Athena. Key lessons on permissions, data lakes, and data quality. A hands-on guide for tackling real-world retail datasets.

A simple request to automate Google feedback forms turned into a technical adventure. From API roadblocks to a smart Google Apps Script pivot, discover how we built a seamless system that cut form creation time from 20 minutes to just 2.

Step-by-step journey of setting up end-to-end AKS monitoring with dashboards, alerts, workbooks, and real-world validations on Azure Kubernetes Service.

My learning experience tracing how an app works when browser is refreshed

Demonstrates the power of AI assisted development to build an end-to-end application grounds up

A hands-on learning journey of building a login and sign-up system from scratch using React, Node.js, Express, and PostgreSQL. Covers real-world challenges, backend integration, password security, and key full-stack development lessons for beginners.

This is the first in a five-part series detailing my experience implementing advanced data engineering solutions with Databricks on Google Cloud Platform. The series covers schema evolution, incremental loading, and orchestration of a robust ELT pipeline.

Discover the 7 major stages of the data engineering lifecycle, from data collection to storage and analysis. Learn the key processes, tools, and best practices that ensure a seamless and efficient data flow, supporting scalable and reliable data systems.

This blog is troubleshooting adventure which navigates networking quirks, uncovers why cluster couldn’t reach PyPI, and find the real fix—without starting from scratch.

Explore query scanning can be optimized from 9.78 MB down to just 3.95 MB using table partitioning. And how to use partitioning, how to decide the right strategy, and the impact it can have on performance and costs.

Dive deeper into query design, optimization techniques, and practical takeaways for BigQuery users.

Wondering when to use a stored procedure vs. a function in SQL? This blog simplifies the differences and helps you choose the right tool for efficient database management and optimized queries.

Discover how BigQuery Omni and BigLake break down data silos, enabling seamless multi-cloud analytics and cost-efficient insights without data movement.

In this article we'll build a motivation towards learning computer vision by solving a real world problem by hand along with assistance with chatGPT

This blog explains how Apache Airflow orchestrates tasks like a conductor leading an orchestra, ensuring smooth and efficient workflow management. Using a fun Romeo and Juliet analogy, it shows how Airflow handles timing, dependencies, and errors.

The blog underscores how snapshots and Point-in-Time Restore (PITR) are essential for data protection, offering a universal, cost-effective solution with applications in disaster recovery, testing, and compliance.

The blog contains the journey of ChatGPT, and what are the limitations of ChatGPT, due to which Langchain came into the picture to overcome the limitations and help us to create applications that can solve our real-time queries

This blog simplifies the complex world of data management by exploring two pivotal concepts: Data Lakes and Data Warehouses.

demystifying the concepts of IaaS, PaaS, and SaaS with Microsoft Azure examples

Discover how Azure Data Factory serves as the ultimate tool for data professionals, simplifying and automating data processes

Revolutionizing e-commerce with Azure Cosmos DB, enhancing data management, personalizing recommendations, real-time responsiveness, and gaining valuable insights.

Highlights the benefits and applications of various NoSQL database types, illustrating how they have revolutionized data management for modern businesses.

This blog delves into the capabilities of Calendar Events Automation using App Script.

Dive into the fundamental concepts and phases of ETL, learning how to extract valuable data, transform it into actionable insights, and load it seamlessly into your systems.

An easy to follow guide prepared based on our experience with upskilling thousands of learners in Data Literacy

Teaching a Robot to Recognize Pastries with Neural Networks and artificial intelligence (AI)

Streamlining Storage Management for E-commerce Business by exploring Flat vs. Hierarchical Systems

Figuring out how Cloud help reduce the Total Cost of Ownership of the IT infrastructure

Understand the circumstances which force organizations to start thinking about migration their business to cloud