John, a Junior Data Engineer on the GlobalMart Data Engineering team, started his day with a new task from his lead: "Build the bronze layer to store raw customer data in Delta tables."

Every day, new CSV files containing customer data land in a folder structure like this:

Since the bronze layer is meant to hold raw, untransformed data, John's responsibility was ensuring all incoming files were ingested as-is into a Customers Delta table. But as he started thinking about the task, two critical concerns came to mind:

Incremental Ingestion → How do we ensure that only new files are processed daily without reloading files already ingested?

Schema Evolution → What if tomorrow's file arrives with additional columns? How can the pipeline automatically merge the new schema with the existing table without breaking?

John realized that while the task sounds simple, the solution needs to be scalable, reliable, and future-proof.

Excited but nervous, John searched the internet and found two common techniques: INSERT INTO and INSERT OVERWRITE. But both came with serious limitations:

INSERT INTO:

Simply appends data without preventing duplicates

If the same files are processed again, duplicates will accumulate

Requires manual tracking to avoid reloading old files

Inefficient for incremental loads

INSERT OVERWRITE:

Replaces the entire table every time

Causes unnecessary data loss or reprocessing

No flexibility to update only new records

Poor performance when working with large datasets
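The two failure modes above can be illustrated with a toy model in plain Python. This is only a sketch: the "table" is a list, the row values are made up, and `insert_into` and `insert_overwrite` are hypothetical helpers that mimic the semantics, not Databricks APIs.

```python
# Toy model: a "table" is just a list of rows; this is not Delta Lake code.
def insert_into(table, rows):
    """Append rows, like INSERT INTO: nothing checks for earlier loads."""
    return table + rows


def insert_overwrite(table, rows):
    """Replace the table contents, like INSERT OVERWRITE."""
    return list(rows)


# Made-up rows standing in for two daily files.
day1 = [{"CustomerID": "C001"}, {"CustomerID": "C002"}]
day2 = [{"CustomerID": "C003"}]

# Re-running the same file with append semantics duplicates its rows.
appended = insert_into(insert_into([], day1), day1)

# Overwriting with only today's file drops everything loaded before.
overwritten = insert_overwrite(insert_into([], day1), day2)

print(len(appended))     # 4 rows: day1 loaded twice
print(len(overwritten))  # 1 row: day1 is gone
```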

John realized that relying on either approach could cause major issues for GlobalMart's growing data volumes.

Still searching for a better approach, John discovered COPY INTO, a command built exactly for scenarios like his. It addressed his two key concerns: incremental ingestion and schema evolution.

To get started with his proof of concept, John prepared smaller chunks of the customer dataset and uploaded them to the data lake. The data lake now contained two initial files: customers_01092025.csv and customers_02092025.csv.

Before using COPY INTO, he wanted to validate the data by loading each CSV file individually and checking the record counts.

customers_01092025.csv: 5 records

customers_02092025.csv: 6 records
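A record-count check like this can be sketched with Python's standard `csv` module. The post does not show how John actually counted the rows, so the helper name `count_records` and the sample content below are assumptions for illustration.

```python
import csv
import io


def count_records(csv_text):
    """Count data rows in CSV text, excluding the header row."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return sum(1 for _ in reader)


# Made-up sample content; the real files' rows are not shown in this post.
sample = "CustomerID,FirstName\nC001,Asha\nC002,Ravi\n"
print(count_records(sample))  # 2
```

For a file on disk, the same reader can be built over an opened file handle instead of `io.StringIO`.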

With the files verified, John was ready to test the ingestion. He used the COPY INTO command to load data from the raw zone into a Delta table in the bronze layer:

%sql

COPY INTO gbmart.bronze.customerscopyintotest

FROM '/mnt/mskltestgbmart/copyintotest'

FILEFORMAT = CSV

FORMAT_OPTIONS ('header'='true','inferSchema'='true')

COPY_OPTIONS ('mergeSchema'='true')

%sql → Runs the cell in SQL mode inside Databricks

COPY INTO gbmart.bronze.customerscopyintotest → Loads data into the Delta table named customerscopyintotest in the bronze schema of the gbmart catalog

FROM '/mnt/mskltestgbmart/copyintotest' → Points to the source folder in the data lake where all raw CSV files land

FILEFORMAT = CSV → Defines that input files are in CSV format

FORMAT_OPTIONS ('header'='true','inferSchema'='true'):

header = true → First row contains column names

inferSchema = true → Databricks automatically detects data types (string, int, date, etc.)

COPY_OPTIONS ('mergeSchema'='true') → Ensures schema evolution - if a new file arrives with extra columns, the Delta table automatically adds those columns instead of failing

In simple terms: This command tells Databricks to take all CSV files from the raw folder, read them with headers, automatically detect and merge schema changes, and load only new files into the bronze Delta table.
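If the same statement has to be issued for several tables or folders, its parts can be assembled from parameters. The sketch below is one possible way to do that; `build_copy_into` is a hypothetical helper, not part of Databricks, and it does no quoting or escaping of its inputs.

```python
def build_copy_into(table, source, file_format="CSV",
                    format_options=None, copy_options=None):
    """Assemble a COPY INTO statement from its parts (hypothetical helper)."""
    def render(options):
        # Render {'header': 'true'} as 'header' = 'true'
        return ", ".join(f"'{key}' = '{value}'" for key, value in options.items())

    lines = [
        f"COPY INTO {table}",
        f"FROM '{source}'",
        f"FILEFORMAT = {file_format}",
    ]
    if format_options:
        lines.append(f"FORMAT_OPTIONS ({render(format_options)})")
    if copy_options:
        lines.append(f"COPY_OPTIONS ({render(copy_options)})")
    return "\n".join(lines)


statement = build_copy_into(
    table="gbmart.bronze.customerscopyintotest",
    source="/mnt/mskltestgbmart/copyintotest",
    format_options={"header": "true", "inferSchema": "true"},
    copy_options={"mergeSchema": "true"},
)
print(statement)
```

In a Databricks Python cell, a string like this would typically be passed to `spark.sql(statement)`; that call is left out here so the sketch runs without a cluster.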

When John first executed the COPY INTO command, he encountered this error:

Why this happened: COPY INTO requires the destination Delta table to exist beforehand - either with a defined schema or as an empty Delta table.

John created the table first:

%sql

CREATE TABLE IF NOT EXISTS gbmart.bronze.customerscopyintotest;

Then re-ran the COPY INTO command:

%sql

COPY INTO gbmart.bronze.customerscopyintotest

FROM 'abfss://test@adlsstoragedata01.dfs.core.windows.net/testcopyinto'

FILEFORMAT = CSV

FORMAT_OPTIONS ('header'='true','inferSchema'='true')

COPY_OPTIONS ('mergeSchema'='true')

Result: Success! The output showed 11 records ingested (5 + 6), confirming that both initial files were loaded into the bronze table.

When John runs COPY INTO on a folder path, Databricks scans the directory in cloud storage (for example Azure Data Lake Storage or Amazon S3) to discover all files matching the specified format (CSV in this case).

COPY INTO maintains an internal record of which files have already been processed. This means if customers_01092025.csv was loaded yesterday, it won't be reloaded today, preventing duplicates.

When new files like customers_02092025.csv or customers_03092025.csv land in the same folder, COPY INTO automatically picks up only those new files and appends them to the Delta table.

If tomorrow's file includes additional columns (like phone_number), the command seamlessly merges that new schema into the Delta table without breaking the pipeline.

After successfully ingesting the first two files, John uploaded a new file (customers_03092025.csv) to verify incremental behavior.

customers_03092025.csv: 5 records

When he re-ran the same COPY INTO command:

Results:

Previously ingested files were skipped

Only the new file with 5 records was picked up

This confirmed that COPY INTO uses directory listing to scan the folder, identify new files, and load only those while ignoring previously processed files.
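The behavior John observed can be mimicked with a small toy model: list the folder, skip any file name already recorded, and load the rest. This is a sketch of the idea only, not how Databricks implements COPY INTO; the class `ToyCopyInto` and the placeholder rows are assumptions, while the file names and row counts come from the test above.

```python
class ToyCopyInto:
    """Toy stand-in for a target table that remembers which files it loaded."""

    def __init__(self):
        self.loaded_files = set()  # names of files already ingested
        self.rows = []             # contents of the "table"

    def run(self, folder):
        """Load every not-yet-seen file; folder maps file name -> list of rows."""
        loaded = 0
        for name in sorted(folder):
            if name in self.loaded_files:
                continue  # already ingested: skip it
            self.rows.extend(folder[name])
            self.loaded_files.add(name)
            loaded += len(folder[name])
        return loaded


# Placeholder rows; only the counts match the files in the post.
folder = {
    "customers_01092025.csv": [{"row": i} for i in range(5)],
    "customers_02092025.csv": [{"row": i} for i in range(6)],
}
target = ToyCopyInto()
first = target.run(folder)    # both initial files are new

folder["customers_03092025.csv"] = [{"row": i} for i in range(5)]
second = target.run(folder)   # only the third file is new
third = target.run(folder)    # nothing new this time

print(first, second, third)   # 11 5 0
```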

Next, John tested a real-world scenario: schema evolution. In practice, incoming files might have additional columns. To simulate this, he added a fourth file (customers_04092025.csv) with additional columns to test how COPY INTO handles schema changes.

The data lake now contained four files, with the latest one having an expanded schema:

customers_04092025.csv: 5 records

New Schema (customers_04092025.csv)

The file contained 5 records with the following fields; the last four are new:

CustomerID: string

FirstName: string

LastName: string

Email: string

PhoneNumber: double

DateOfBirth: date

RegistrationDate: date

PreferredPaymentMethodID: string

third_party_data_sharing: string

marketing_communication: string

cookies_tracking: string

consent_timestamp: string

Previous Schema

The original files only had:

CustomerID: string

FirstName: string

LastName: string

Email: string

PhoneNumber: double

DateOfBirth: date

RegistrationDate: date

PreferredPaymentMethodID: string

When John ingested the fourth file using the same command, with 'mergeSchema'='true' set in FORMAT_OPTIONS as well as in COPY_OPTIONS, the new columns were merged into the Delta table without errors.

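FORMAT_OPTIONS ('mergeSchema'='true') → Infers the schema across all the files being read and merges them into one schema, so files with different columns can be read together

COPY_OPTIONS ('mergeSchema'='true') → Allows the target Delta table's schema to evolve to match the incoming data when it is written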

Final Result: The table now contained 21 rows with the evolved schema, demonstrating COPY INTO's ability to handle schema changes automatically.
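What schema merging means for the rows already in the table can be sketched in plain Python: the merged schema is the existing columns plus any new ones, and earlier rows carry an empty value (NULL in the table, `None` here) for the columns they never had. The helpers `merge_schema` and `align_rows` and the sample row are hypothetical; the column names come from the two schemas listed above.

```python
def merge_schema(table_columns, file_columns):
    """Keep existing columns in order, then append columns the table lacks."""
    merged = list(table_columns)
    for column in file_columns:
        if column not in merged:
            merged.append(column)
    return merged


def align_rows(rows, columns):
    """Give every row all columns; missing values become None."""
    return [{column: row.get(column) for column in columns} for row in rows]


old_columns = [
    "CustomerID", "FirstName", "LastName", "Email",
    "PhoneNumber", "DateOfBirth", "RegistrationDate",
    "PreferredPaymentMethodID",
]
new_columns = old_columns + [
    "third_party_data_sharing", "marketing_communication",
    "cookies_tracking", "consent_timestamp",
]

merged = merge_schema(old_columns, new_columns)

# Made-up row from one of the earlier files (values are illustrative).
old_row = {"CustomerID": "C001", "FirstName": "Asha"}
aligned = align_rows([old_row], merged)[0]

print(len(merged))                   # 12
print(aligned["consent_timestamp"])  # None
```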

By the end of his proof of concept, John learned important lessons about COPY INTO:

COPY INTO tracks file names, not the data inside files

If the same file is reprocessed, it will be skipped - even if the contents have changed

This is acceptable for the bronze layer since its purpose is to preserve raw files exactly as they arrive

If a file with the same name but different content is placed in the folder, the newer version will be ignored

To ensure all data is captured, each file should have a unique name when landing in the data lake

Consider using timestamps or sequence numbers in file names

Only processes new files, reducing compute costs

No need for complex file tracking mechanisms

Built-in deduplication at the file level

Always create the target table before running COPY INTO

Use mergeSchema=true for dynamic schema handling

Monitor for corrupt or malformed files that might cause failures
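Because tracking is by file name, a file that is overwritten in place with different content would go unnoticed. One way to spot that situation outside of COPY INTO is to keep a content fingerprint per file and compare it on each run. The sketch below is an assumption about how such a check could look, not a Databricks feature; `fingerprint`, `find_changed_files`, and the sample bytes are hypothetical.

```python
import hashlib


def fingerprint(content):
    """Return a SHA-256 hex digest of a file's bytes."""
    return hashlib.sha256(content).hexdigest()


def find_changed_files(ledger, folder):
    """Names already in the ledger whose current content no longer matches.

    ledger: file name -> fingerprint recorded when the file was first loaded
    folder: file name -> current file bytes
    """
    return sorted(
        name for name, content in folder.items()
        if name in ledger and ledger[name] != fingerprint(content)
    )


# Made-up file contents for illustration.
original = b"CustomerID,FirstName\nC001,Asha\n"
ledger = {"customers_01092025.csv": fingerprint(original)}

# The same name arrives again with different content.
folder = {
    "customers_01092025.csv": b"CustomerID,FirstName\nC001,Asha\nC002,Ravi\n",
    "customers_02092025.csv": b"CustomerID,FirstName\nC003,Meera\n",
}

print(find_changed_files(ledger, folder))  # ['customers_01092025.csv']
```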

Based on John's findings, here are recommendations for production implementation:

File Naming Convention: Use patterns like tablename_YYYYMMDD_HHMMSS.csv to ensure uniqueness

Monitoring: Set up alerts for failed COPY INTO operations

Schema Validation: Consider adding data quality checks after ingestion

Partitioning: For large datasets, consider partitioning the Delta table by date or other relevant columns

Retention Policy: Implement lifecycle management for both raw files and Delta table versions
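The naming convention in the first recommendation can be generated with the standard `datetime` module. This is a minimal sketch; the helper name `landing_file_name` and the example timestamp are assumptions.

```python
from datetime import datetime, timezone


def landing_file_name(table_name, when):
    """Build a name of the form tablename_YYYYMMDD_HHMMSS.csv."""
    return f"{table_name}_{when.strftime('%Y%m%d_%H%M%S')}.csv"


# Example timestamp chosen for illustration.
example = datetime(2025, 9, 1, 8, 30, 0, tzinfo=timezone.utc)
print(landing_file_name("customers", example))  # customers_20250901_083000.csv
```

Second-level timestamps are unique only if no two files for the same table are produced within the same second; a sequence number can be appended where that is a risk.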

COPY INTO proved to be the ideal solution for John's bronze layer requirements, providing:

Automatic incremental ingestion without duplicates

Schema evolution support for changing data structures

Simplified operations with minimal maintenance overhead

Cost efficiency by processing only new files

This approach enables GlobalMart to build a robust, scalable data lake foundation that can adapt to changing business requirements while maintaining data integrity and operational efficiency.

New engineers shouldn't learn Docker like they're defusing a bomb. Here's how we created a fear-free learning environment—and cut training time in half." (165 characters)

A complete beginner’s guide to data quality, covering key challenges, real-world examples, and best practices for building trustworthy data.

Explore the power of Databricks Lakehouse, Delta tables, and modern data engineering practices to build reliable, scalable, and high-quality data pipelines."

Ever wonder how Netflix instantly unlocks your account after payment, or how live sports scores update in real-time? It's all thanks to Change Data Capture (CDC). Instead of scanning entire databases repeatedly, CDC tracks exactly what changed. Learn how it works with real examples.

A real-world Terraform war story where a “simple” Azure SQL deployment spirals into seven hard-earned lessons, covering deprecated providers, breaking changes, hidden Azure policies, and why cloud tutorials age fast. A practical, honest read for anyone learning Infrastructure as Code the hard way.

From Excel to Interactive Dashboard: A hands-on journey building a dynamic pricing optimizer. I started with manual calculations in Excel to prove I understood the math, then automated the analysis with a Python pricing engine, and finally created an interactive Streamlit dashboard.

Data doesn’t wait - and neither should your insights. This blog breaks down streaming vs batch processing and shows, step by step, how to process real-time data using Azure Databricks.

A curious moment while shopping on Amazon turns into a deep dive into how Rufus, Amazon’s AI assistant, uses Generative AI, RAG, and semantic search to deliver real-time, accurate answers. This blog breaks down the architecture behind conversational commerce in a simple, story-driven way.

This blog talks about Databricks’ Unity Catalog upgrades -like Governed Tags, Automated Data Classification, and ABAC which make data governance smarter, faster, and more automated.

Tired of boring images? Meet the 'Jai & Veeru' of AI! See how combining Claude and Nano Banana Pro creates mind-blowing results for comics, diagrams, and more.

An honest, first-person account of learning dynamic pricing through hands-on Excel analysis. I tackled a real CPG problem : Should FreshJuice implement different prices for weekdays vs weekends across 30 retail stores?

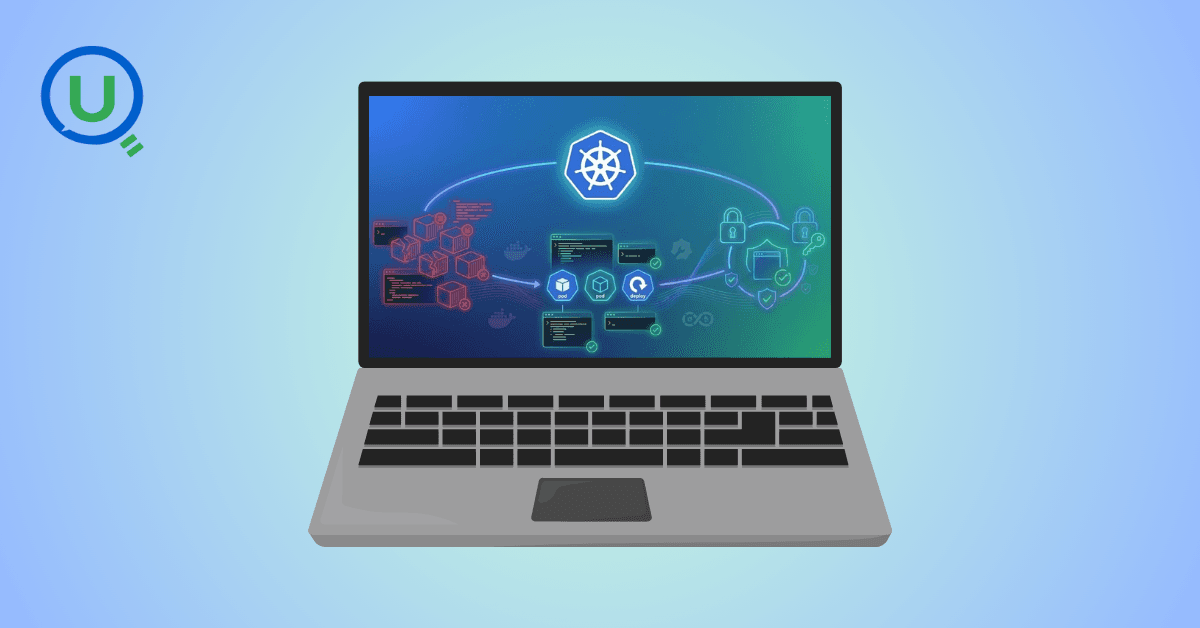

What I thought would be a simple RBAC implementation turned into a comprehensive lesson in Kubernetes deployment. Part 1: Fixing three critical deployment errors. Part 2: Implementing namespace-scoped RBAC security. Real terminal outputs and lessons learned included

This blog walks you through how Databricks Connect completely transforms PySpark development workflow by letting us run Databricks-backed Spark code directly from your local IDE. From setup to debugging to best practices this Blog covers it all.

This blog unpacks how brands like Amazon and Domino’s decide who gets which coupon and why. Learn how simple RFM metrics turn raw purchase data into smart, personalised loyalty offers.

Learn how Snowflake's Query Acceleration Service provides temporary compute bursts for heavy queries without upsizing. Per-second billing, automatic scaling.

A simple ETL job broke into a 5-hour Kubernetes DNS nightmare. This blog walks through the symptoms, the chase, and the surprisingly simple fix.

A data engineer started a large cluster for a short task and couldn’t stop it due to limited permissions, leaving it idle and causing unnecessary cloud costs. This highlights the need for proper access control and auto-termination.

Say goodbye to deployment headaches. Learn how Databricks Asset Bundles keep your pipelines consistent, reproducible, and stress-free—with real-world examples and practical tips for data engineers.

Tracking sensitive data across Snowflake gets overwhelming fast. Learn how object tagging solved my data governance challenges with automated masking, instant PII discovery, and effortless scaling. From manual spreadsheets to systematic control. A practical guide for data professionals.

My first hand experience learning the essential concepts of Dynamic pricing

Running data quality checks on retail sales distribution data

This blog explores my experience with cleaning datasets during the process of performing EDA for analyzing whether geographical attributes impact sales of beverages

Snowflake recommends 100–250 MB files for optimal loading, but why? What happens when you load one large file versus splitting it into smaller chunks? I tested this with real data, and the results were surprising. Click to discover how this simple change can drastically improve loading performance.

Learn Git and GitHub step by step with this complete guide. From Git basics to branching, merging, push, pull, and resolving merge conflicts—this tutorial helps beginners and developers collaborate like pros.

Discover how data management, governance, and security work together—just like your favorite food delivery app. Learn why these three pillars turn raw data into trusted insights, ensuring trust, compliance, and business growth.

Beginner’s journey in AWS Data Engineering—building a retail data pipeline with S3, Glue, and Athena. Key lessons on permissions, data lakes, and data quality. A hands-on guide for tackling real-world retail datasets.

A simple request to automate Google feedback forms turned into a technical adventure. From API roadblocks to a smart Google Apps Script pivot, discover how we built a seamless system that cut form creation time from 20 minutes to just 2.

Step-by-step journey of setting up end-to-end AKS monitoring with dashboards, alerts, workbooks, and real-world validations on Azure Kubernetes Service.

My learning experience tracing how an app works when browser is refreshed

Demonstrates the power of AI assisted development to build an end-to-end application grounds up

A hands-on learning journey of building a login and sign-up system from scratch using React, Node.js, Express, and PostgreSQL. Covers real-world challenges, backend integration, password security, and key full-stack development lessons for beginners.

This is the first in a five-part series detailing my experience implementing advanced data engineering solutions with Databricks on Google Cloud Platform. The series covers schema evolution, incremental loading, and orchestration of a robust ELT pipeline.

Discover the 7 major stages of the data engineering lifecycle, from data collection to storage and analysis. Learn the key processes, tools, and best practices that ensure a seamless and efficient data flow, supporting scalable and reliable data systems.

This blog is troubleshooting adventure which navigates networking quirks, uncovers why cluster couldn’t reach PyPI, and find the real fix—without starting from scratch.

Explore query scanning can be optimized from 9.78 MB down to just 3.95 MB using table partitioning. And how to use partitioning, how to decide the right strategy, and the impact it can have on performance and costs.

Dive deeper into query design, optimization techniques, and practical takeaways for BigQuery users.

Wondering when to use a stored procedure vs. a function in SQL? This blog simplifies the differences and helps you choose the right tool for efficient database management and optimized queries.

Discover how BigQuery Omni and BigLake break down data silos, enabling seamless multi-cloud analytics and cost-efficient insights without data movement.

In this article we'll build a motivation towards learning computer vision by solving a real world problem by hand along with assistance with chatGPT

This blog explains how Apache Airflow orchestrates tasks like a conductor leading an orchestra, ensuring smooth and efficient workflow management. Using a fun Romeo and Juliet analogy, it shows how Airflow handles timing, dependencies, and errors.

The blog underscores how snapshots and Point-in-Time Restore (PITR) are essential for data protection, offering a universal, cost-effective solution with applications in disaster recovery, testing, and compliance.

The blog contains the journey of ChatGPT, and what are the limitations of ChatGPT, due to which Langchain came into the picture to overcome the limitations and help us to create applications that can solve our real-time queries

This blog simplifies the complex world of data management by exploring two pivotal concepts: Data Lakes and Data Warehouses.

demystifying the concepts of IaaS, PaaS, and SaaS with Microsoft Azure examples

Discover how Azure Data Factory serves as the ultimate tool for data professionals, simplifying and automating data processes

Revolutionizing e-commerce with Azure Cosmos DB, enhancing data management, personalizing recommendations, real-time responsiveness, and gaining valuable insights.

Highlights the benefits and applications of various NoSQL database types, illustrating how they have revolutionized data management for modern businesses.

This blog delves into the capabilities of Calendar Events Automation using App Script.

Dive into the fundamental concepts and phases of ETL, learning how to extract valuable data, transform it into actionable insights, and load it seamlessly into your systems.

An easy to follow guide prepared based on our experience with upskilling thousands of learners in Data Literacy

Teaching a Robot to Recognize Pastries with Neural Networks and artificial intelligence (AI)

Streamlining Storage Management for E-commerce Business by exploring Flat vs. Hierarchical Systems

Figuring out how Cloud help reduce the Total Cost of Ownership of the IT infrastructure

Understand the circumstances which force organizations to start thinking about migration their business to cloud